Zipf's word frequency law in natural language: A critical review and future directions. Steven T. Piantadosi. Published online: 25 March 2014.

Piantadosi argues that to make progress at understanding why language obeys Zipf's law, studies must seek evidence beyond the law itself, testing assumptions and evaluating novel predictions with new, independent data.

In this article, I will explain what Zipf's law is in the context of natural language processing (NLP) and how knowledge of this distribution has been used to build better neural language models. I assume the reader is familiar with the concept of neural language models.


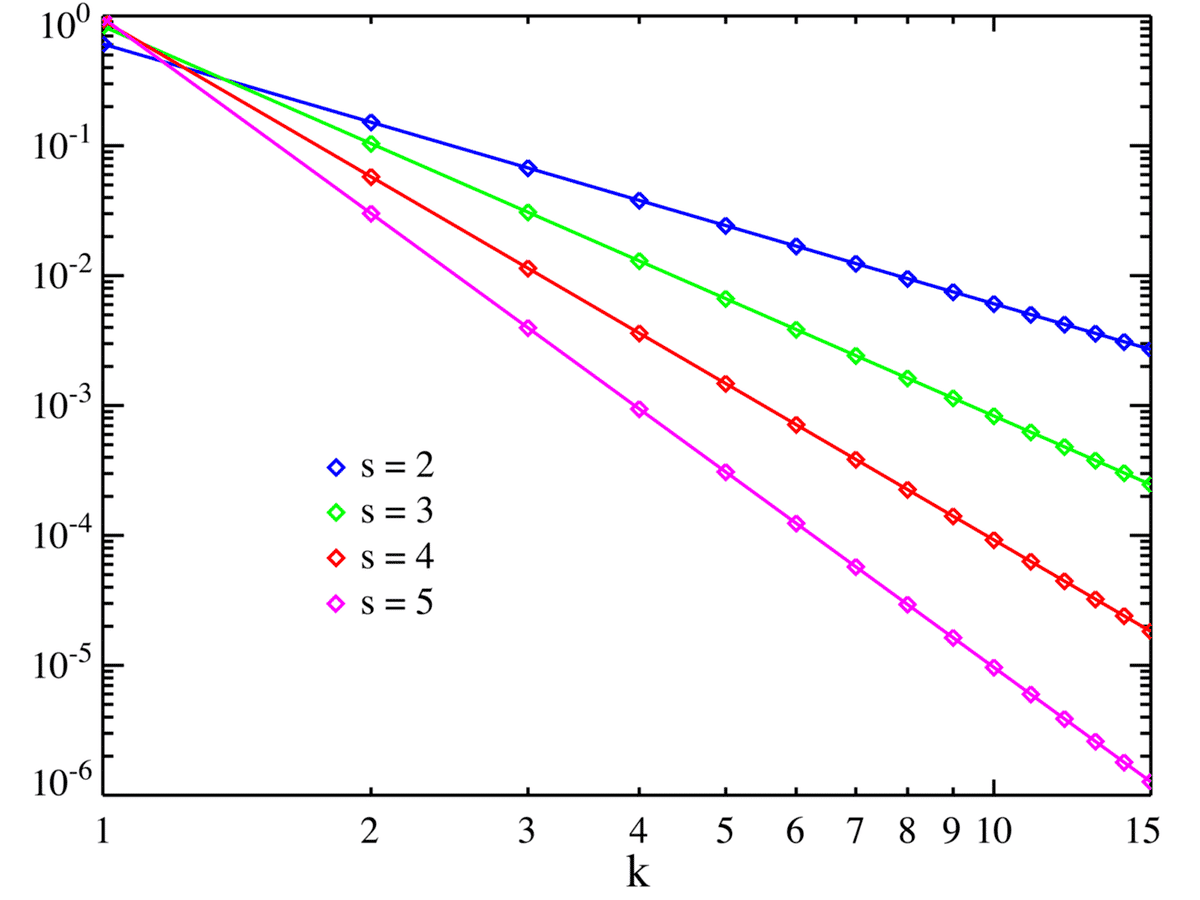

Zipf's law states that, given some corpus of natural language utterances, the frequency of any word is inversely proportional to its rank in the frequency table. Thus the most frequent word will occur approximately twice as often as the second most frequent word, three times as often as the third most frequent word, and so on. In its general form the law is written

$$f(r) \propto \frac{1}{r^{\alpha}}$$

with $\alpha \approx 1$ (Zipf, 1936, 1949). In this equation, $r$ is called the frequency rank of a word, and $f(r)$ is its frequency in a natural corpus. Since the actual observed frequency will depend on the size of the corpus examined, the law states frequencies proportionally: the most frequent word ($r = 1$) has a frequency proportional to 1, the second most frequent word ($r = 2$) has a frequency proportional to $1/2^{\alpha}$, the third most frequent word has a frequency proportional to $1/3^{\alpha}$, and so on.
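To make the proportional statement concrete, here is a minimal Python sketch that computes the frequency of the word at rank $r$ relative to the top-ranked word. Because the unknown constant of proportionality cancels in the ratio $f(r)/f(1)$, the result is simply $r^{-\alpha}$. The function name `zipf_relative_frequency` is hypothetical, chosen only for illustration.

```python
def zipf_relative_frequency(rank: int, alpha: float = 1.0) -> float:
    """Frequency of the word at `rank`, relative to the top-ranked word.

    Under f(r) proportional to 1 / r**alpha, the proportionality constant
    cancels in the ratio f(r) / f(1), leaving r**(-alpha).
    """
    if rank < 1:
        raise ValueError("rank must be a positive integer")
    return rank ** (-alpha)


if __name__ == "__main__":
    # With alpha = 1 the ratios are 1, 1/2, 1/3, 1/4, 1/5: the top word is
    # twice as frequent as rank 2, three times as frequent as rank 3, etc.
    for r in range(1, 6):
        print(r, round(zipf_relative_frequency(r), 3))
```

Setting `alpha` slightly above or below 1 shows how sensitive the tail of the distribution is to the exponent.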


Zipf's law describes one aspect of the statistical distribution of words in language: if you rank words by their frequency in a sufficiently large collection of texts and then plot frequency against rank, you get a steeply falling, hyperbola-like curve ($f \propto 1/r$); if you plot both axes on a logarithmic scale, you get a roughly straight line with a slope close to $-1$. So the most frequent word occurs about twice as often as the second most frequent word, three times as often as the third most frequent word, and so on down to the least frequent word (see figure 1).
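The straight line on log-log axes suggests a quick diagnostic: since $\log f = \log C - \alpha \log r$, the negated slope of a line fitted to $(\log r, \log f)$ estimates $\alpha$. The sketch below does this with ordinary least squares in plain Python; `fit_zipf_exponent` is a hypothetical helper. Treat it as a rough check rather than a rigorous estimator, since least squares on log-log data is known to be biased and maximum-likelihood methods are generally preferred for serious power-law fitting.

```python
import math
from typing import Sequence


def fit_zipf_exponent(frequencies: Sequence[float]) -> float:
    """Estimate alpha by least squares on log(rank) vs log(frequency).

    `frequencies` must be positive and sorted in descending order, so that
    index 0 is rank 1. Under f(r) = C / r**alpha the log-log plot is a
    straight line with slope -alpha, so we return the negated fitted slope.
    """
    if len(frequencies) < 2:
        raise ValueError("need at least two ranks to fit a line")
    xs = [math.log(rank) for rank in range(1, len(frequencies) + 1)]
    ys = [math.log(f) for f in frequencies]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Slope of the least-squares line = covariance(x, y) / variance(x).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return -cov / var


if __name__ == "__main__":
    # Synthetic, perfectly Zipfian counts 1000 / r for ranks 1..50.
    ideal = [1000 / r for r in range(1, 51)]
    print(round(fit_zipf_exponent(ideal), 3))
```

On real corpus counts, the extreme head and the long tail of singletons usually deviate from the line, so the estimate depends on which ranks you include.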


A ranked list of the most common English words begins: the, of, and, to, a, in, is, I, that, it, for, you, was, with, on, as, have, but, be, they.


The observation of Zipf on the distribution of words in natural languages is called Zipf's law. It describes word behaviour in an entire corpus and can be regarded as a roughly accurate characterization of certain empirical facts. We have the intuition that, regardless of the language, some words are more frequent than others. According to the Brown Corpus of American English text (a research corpus of about one million words), the word "the" is the most frequent one, accounting for nearly 7% of all word occurrences.
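Building the frequency table itself is straightforward. The sketch below, assuming a deliberately naive tokenizer (lowercase the text, keep runs of letters and apostrophes), ranks words by count and reports the share of all tokens taken by the top-ranked word. The helpers `rank_words` and `top_word_share` are hypothetical; run on the Brown Corpus with its own tokenization, the share for "the" would be expected to land near the 7% quoted above, whereas a toy sentence is far too short to show that.

```python
import re
from collections import Counter


def rank_words(text: str) -> list[tuple[str, int]]:
    """Return (word, count) pairs sorted from most to least frequent.

    Tokenization is deliberately naive: lowercase the text, then keep runs
    of ASCII letters and apostrophes. Ties keep first-occurrence order.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens).most_common()


def top_word_share(text: str) -> tuple[str, float]:
    """Return the most frequent word and its fraction of all tokens."""
    ranked = rank_words(text)
    if not ranked:
        raise ValueError("text contains no tokens")
    total = sum(count for _, count in ranked)
    word, count = ranked[0]
    return word, count / total


if __name__ == "__main__":
    sample = "The cat sat on the mat and the dog sat on the rug."
    print(rank_words(sample)[:3])
    print(top_word_share(sample))
```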

According to Zipf's law, frequency × rank ≈ constant: the frequency of a word multiplied by its rank in a large corpus is approximately the same for every word.

Piantadosi's review evaluates many theoretical explanations of Zipf's law in language and concludes that no prior account straightforwardly explains all the basic facts or is supported with independent evaluation of its underlying assumptions.

The code to reproduce the numbers and figures presented in this article can be downloaded from this …

Zipf's law (/zɪf/, not /tsɪpf/ as in German) is an empirical law formulated using mathematical statistics. It refers to the fact that many types of data studied in the physical and social sciences can be approximated with a Zipfian distribution, one of a family of related discrete power-law probability distributions. The Zipf distribution is related to the zeta distribution, but is not identical to it.
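The "frequency × rank = constant" form is the $\alpha = 1$ case of the law. One common way to write a finite Zipf distribution over $N$ ranks is $p(r) = r^{-s} / H_{N,s}$, where $H_{N,s} = \sum_{k=1}^{N} k^{-s}$ is the generalized harmonic number; with $s = 1$, every product $r \cdot p(r)$ equals $1/H_{N,1}$. The sketch below assumes that parameterization (`zipf_pmf`, `n_ranks` and `s` are illustrative names). Letting $N$ grow without bound, for $s > 1$, gives the zeta distribution, which is one way to see how the two are related but not identical.

```python
def zipf_pmf(n_ranks: int, s: float = 1.0) -> list[float]:
    """Probabilities p(r) = r**(-s) / H(N, s) for ranks r = 1..N.

    H(N, s) = sum over k = 1..N of k**(-s) is the generalized harmonic
    number; dividing by it makes the finite distribution sum to 1.
    """
    if n_ranks < 1:
        raise ValueError("n_ranks must be positive")
    weights = [r ** (-s) for r in range(1, n_ranks + 1)]
    harmonic = sum(weights)
    return [w / harmonic for w in weights]


if __name__ == "__main__":
    probs = zipf_pmf(10_000, s=1.0)
    print(round(sum(probs), 6))
    # With s = 1, rank * p(rank) is the same for every rank (1 / H(N, 1)),
    # which is the "frequency * rank = constant" statement.
    for r in (1, 10, 100, 1000):
        print(r, r * probs[r - 1])
```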
